In recent years, artificial intelligence (AI) has emerged as a transformative force in healthcare, driven largely by advances in large language models (LLMs). If you are a clinician looking to bring AI into your practice, understanding the nuances of this technology is essential for navigating the evolving healthcare AI landscape.

Understanding the AI Spectrum

Artificial Intelligence (AI): Artificial intelligence refers to the simulation of human intelligence processes by machines, particularly computer systems. These processes include learning (acquiring information and rules for using that information), reasoning (using rules to reach approximate or definite conclusions), and self-correction.

Machine Learning (ML): Machine learning is a subset of artificial intelligence (AI) that involves the development of algorithms and statistical models that enable computers to learn from and make predictions based on data. In machine learning, algorithms are trained on data to identify patterns or make predictions without being explicitly programmed.

E.g.: Predicting the chance of patient readmission.
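
As a rough illustration of "learning from data without being explicitly programmed", the sketch below lets a program pick its own cut-off for flagging readmission risk from a handful of examples. Everything in it is an assumption made for illustration: the data is synthetic, the single feature (prior admissions) is hypothetical, and `fit_threshold` and `predict` are made-up names. Real readmission models use many more features and far more data.

```python
# Synthetic, hypothetical data: number of prior admissions in the last year,
# and whether the patient was readmitted within 30 days (1) or not (0).
prior_admissions = [0, 0, 1, 1, 2, 3, 4, 5]
readmitted = [0, 0, 0, 0, 1, 1, 1, 1]


def fit_threshold(xs, ys):
    """Learn the cut-off t for the rule 'predict readmission if x >= t'
    that makes the fewest mistakes on the training examples."""
    best_t, best_errors = None, len(xs) + 1
    for t in sorted(set(xs)):
        # Count training examples where the rule disagrees with the label.
        errors = sum((x >= t) != bool(y) for x, y in zip(xs, ys))
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


def predict(x, threshold):
    """Apply the learned rule to a new patient."""
    return int(x >= threshold)


threshold = fit_threshold(prior_admissions, readmitted)
print("learned cut-off:", threshold)
print("patient with 3 prior admissions flagged:", predict(3, threshold))
```

Nobody wrote the cut-off into the program; it was derived from the examples, which is the essential difference from a hand-coded rule.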

Deep Learning (DL): Deep learning is a subset of machine learning that uses artificial neural networks with multiple layers (hence the term “deep”) to learn representations of data. Deep learning algorithms can automatically discover patterns and features from raw data, enabling tasks such as image recognition, natural language processing, and speech recognition. Deep learning models are typically trained using a mathematical technique called back-propagation, which adjusts the network’s internal weights to reduce its prediction error. Deep learning may be a path towards AI.

E.g.: Detecting a pneumothorax on a chest X-ray.

Natural Language Processing (NLP): Natural language processing is a branch of AI that focuses on the interaction between computers and humans through natural language. Modern NLP systems are largely built with deep learning. NLP involves tasks such as language understanding, language generation, sentiment analysis, and machine translation.

E.g.: Summarising a doctor-patient conversation from its transcript.

Large Language Models (LLMs): Large language models are a type of deep learning model trained on vast amounts of text data to understand and generate human-like text. These models, such as GPT (Generative Pre-trained Transformer), have been trained on diverse internet text to perform various natural language processing tasks, including text generation, translation, summarisation, and question answering.

E.g.: Medical chatbot

Generative AI: Generative AI refers to artificial intelligence / deep learning systems or models that can create new data instances, such as images, text, audio, or CT scans, that resemble those found in the training data. Generative AI has applications in creative fields like art generation, text generation, and content creation.

E.g.: A Generative AI model could generate synthetic chest X-rays (CXRs), but some of them may contain anomalous structures or implausible pathologies.

One of the significant challenges of Generative AI is hallucination: the model generates data instances (images, sentences, etc.) that do not accurately represent real-world examples or are highly unrealistic. Hallucinated outputs contain artifacts, inconsistencies, or improbable features not present in the training data. Hallucination indicates that the model lacks a sufficiently grounded understanding of the data in its training set.

Navigating the Maze

Here are some pointers to keep in mind when evaluating an AI solution for clinical use.

- Purpose and Context: Understanding the algorithm’s intended purpose and context, and using it only for that purpose.

- Data Quality: Evaluating the accuracy, bias, labelling correctness, and standardisation of training data.

- Performance: Assessing how well the algorithm performs, for example its sensitivity and specificity on data representative of your patient population.

- Transferability: Determining if the algorithm is adaptable to new clinical settings.

- Regulatory Clearances: Ensuring the algorithm has obtained the necessary regulatory approvals, such as FDA clearance (United States), CE marking (Europe), or TGA approval (Australia), and is cleared for use in your country.

- Privacy Compliance: Confirming compliance with all privacy regulations.
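
The "Performance" pointer above can be made more concrete with the standard diagnostic accuracy measures that vendor validation studies usually report. The following is a minimal sketch, assuming you have the counts of true and false positives and negatives from such a study; the function name `diagnostic_metrics` and the example counts are hypothetical and not taken from any real product evaluation.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Compute common diagnostic accuracy measures from confusion-matrix counts.

    tp/fn: diseased cases the algorithm flagged / missed.
    tn/fp: healthy cases the algorithm cleared / wrongly flagged.
    A measure is None when its denominator is zero.
    """
    def ratio(numerator, denominator):
        return numerator / denominator if denominator else None

    return {
        "sensitivity": ratio(tp, tp + fn),  # share of diseased cases detected
        "specificity": ratio(tn, tn + fp),  # share of healthy cases cleared
        "ppv": ratio(tp, tp + fp),          # chance a positive flag is correct
        "npv": ratio(tn, tn + fn),          # chance a negative result is correct
    }


# Made-up counts for illustration only.
metrics = diagnostic_metrics(tp=90, fp=40, tn=160, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Sensitivity and specificity describe the algorithm itself, whereas PPV and NPV also depend on how common the condition is in the population tested. This is one reason the "Transferability" pointer matters: the same algorithm can yield different predictive values in a different clinical setting.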

annalise.ai’s AI portfolio

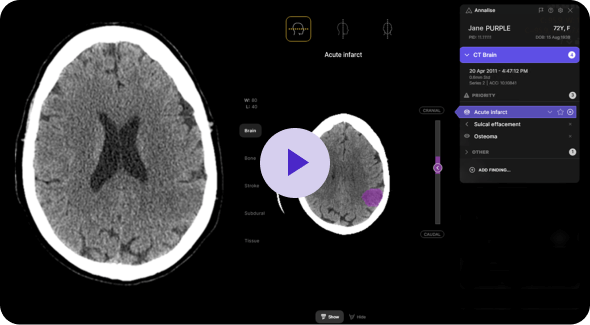

While some vendors rely on AI algorithms trained on labels derived from NLP-extracted reports or labels generated by radiographers and medical graduates, annalise.ai takes a different, more rigorous approach. We collaborated with expert radiologists to meticulously create over 280 million labels. Each study underwent independent hand-annotation by at least three qualified radiologists, ensuring the precision and sensitivity of our algorithms.

This commitment to accuracy extends to our reporting tool. Draft reports are only as good as the underlying solution. Therefore, our reporting tool, Annalise Reporting, stands out by offering reliability and depth. While narrow AI solutions are limited to generating specific reports, our comprehensive approach allows for creating thorough draft reports covering a wide range of findings.

Annalise Reporting is grounded in a foundation of 124 chest X-ray findings and 130 findings on non-contrast CT brain studies, enabling the generation of detailed reports. According to Dr Aengus Tran, co-founder of annalise.ai, “The beauty with this approach is that we can generate hallucination-free reports because they are grounded in the layers of regulatory-cleared diagnostic findings.”

Striking the Balance

For clinicians, leveraging AI in clinical practice is more important than ever. However, understanding the differences between the various types of healthcare AI is imperative. AI holds immense potential, and choosing solutions that meet stringent criteria for transparency, accuracy, and regulatory adherence keeps its adoption aligned with ethical and practical considerations.