Author: Minta Chen, Head of Quality Assurance and Regulatory Affairs, annalise.ai

Implementing an AI imaging system involves a significant investment of time, energy and capital, so you want to be certain that the solution you choose is the best one possible. But with the vast range of providers and products on the market, you may be wondering where to start.

Fortunately, there are some key signposts you can follow when making your decision. Here are three crucial questions to ask to help ensure your chosen solution meets your needs both now and well into the future.

Question 1: What do I NEED the solution to do for me?

The first thing to consider is why you are choosing to invest in a medical imaging AI solution. There may be several reasons, but it is crucial to be clear about the primary one. This will make it easier to narrow down your search and reach a decision.

The most important factor is usually the intended use of the device. Pay special attention to the indications for use to ensure they match your needs. It is also essential to understand how the device will impact your clinical workflow and how easy it is to use.

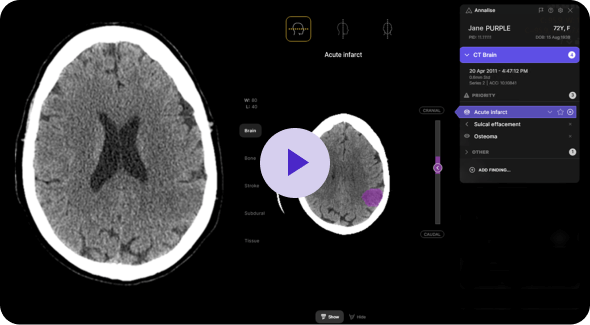

Annalise Triage, for example, is specifically configured for US clinical practice. Under current standard practice, exams are first sorted by the referring physician’s priority and then organized according to service level agreements (SLAs) or a first-in, first-out (FIFO) approach. This means that patients with critical, time-sensitive conditions, many of whom may be asymptomatic, can end up waiting for hours in the queue. This is where integrating AI becomes indispensable. Annalise Triage can reliably and accurately prioritize time-sensitive findings in chest X-rays and non-contrast head CT scans, giving healthcare professionals valuable support from an FDA-cleared solution. Studies containing any of the 12 time-sensitive findings are promptly flagged, so the worklist can be reordered and patients with critical findings reviewed first. As a vendor-agnostic solution, it integrates easily with both PACS and RIS systems.
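To make the difference between FIFO ordering and AI-assisted prioritization concrete, here is a minimal Python sketch. It is an illustration only, not Annalise's implementation: the `Study` class, its fields and the accession numbers are all hypothetical, and a real worklist would also respect physician priority and SLAs.

```python
from dataclasses import dataclass


@dataclass
class Study:
    accession: str      # hypothetical study identifier
    arrival_order: int  # position in the original FIFO queue
    flagged: bool       # True if the AI flagged a time-sensitive finding (assumed field)


def reorder_worklist(studies):
    """Put flagged studies first; keep FIFO order within each group."""
    return sorted(studies, key=lambda s: (not s.flagged, s.arrival_order))


# Made-up worklist for illustration
worklist = [
    Study("A001", 1, False),
    Study("A002", 2, False),
    Study("A003", 3, True),
    Study("A004", 4, False),
    Study("A005", 5, True),
]

print([s.accession for s in reorder_worklist(worklist)])
# ['A003', 'A005', 'A001', 'A002', 'A004']
```

In this toy example, the two flagged studies move from third and fifth place to the top of the list, while the unflagged studies keep their original order.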

Question 2: Is Sensitivity/Specificity data adequate to assess the performance of the software?

It is crucial to assess the solution’s performance. Clearly, you want a solution that detects the findings it claims to detect with a high degree of accuracy. You also want it to flag only findings that are actually present.

Metrics that can help you compare the performance of different devices include:

- Area under the curve (AUC) – provides a quantitative measure of the software’s ability to discriminate between different conditions or classes. A higher AUC value generally indicates better accuracy in distinguishing between normal and abnormal findings in images.

- The Sensitivity of a solution is the percentage of positive cases that the software correctly identifies as positive.

- The Specificity is the percentage of negative cases that the software correctly identifies as negative.

- Similarly, keep in mind the prevalence of a disease or an abnormality in your setting, because it affects how many of the flagged studies turn out to be true positives.
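As a rough illustration of how these metrics are calculated, the Python sketch below uses made-up counts and scores; none of the numbers come from a real device or study. The `auc` helper uses the pairwise interpretation of AUC (the chance that a randomly chosen positive case scores higher than a randomly chosen negative one), which is one common way to compute it on small samples.

```python
def sensitivity(tp, fn):
    """Share of truly positive cases that the software flags as positive."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Share of truly negative cases that the software reports as negative."""
    return tn / (tn + fp)


def prevalence(tp, fn, tn, fp):
    """Share of all cases that are truly positive."""
    return (tp + fn) / (tp + fn + tn + fp)


def auc(pos_scores, neg_scores):
    """Pairwise AUC: a positive outscoring a negative counts 1, a tie counts 0.5."""
    wins = sum((p > n) + 0.5 * (p == n) for p in pos_scores for n in neg_scores)
    return wins / (len(pos_scores) * len(neg_scores))


# Made-up confusion-matrix counts for illustration only
tp, fn, tn, fp = 90, 10, 850, 50

print(f"sensitivity = {sensitivity(tp, fn):.1%}")         # 90.0%
print(f"specificity = {specificity(tn, fp):.1%}")         # 94.4%
print(f"prevalence  = {prevalence(tp, fn, tn, fp):.1%}")  # 10.0%

# Made-up model scores for positive and negative cases
print(f"AUC = {auc([0.9, 0.8, 0.4], [0.7, 0.3, 0.2, 0.1]):.3f}")  # 0.917
```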

For instance, the Annalise Triage solution has an AUC of 0.979 for pneumothorax. It detects pneumothorax with a sensitivity of 94.3%, which means the solution detects approximately 94% of pneumothorax cases. It has a specificity of 92.0%, which means it correctly identifies roughly 92% of pneumothorax-negative cases as negative.
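To see why prevalence matters alongside these figures, the sketch below applies Bayes' rule to the sensitivity and specificity quoted above and estimates the positive predictive value (PPV), that is, the share of flagged studies that are truly positive. The prevalence values are hypothetical and chosen only to show the trend; they do not describe any particular site.

```python
def ppv(sens, spec, prev):
    """Positive predictive value from sensitivity, specificity and prevalence."""
    true_pos = sens * prev               # fraction of all cases: positive and flagged
    false_pos = (1 - spec) * (1 - prev)  # fraction of all cases: negative but flagged
    return true_pos / (true_pos + false_pos)


SENS, SPEC = 0.943, 0.920  # pneumothorax figures quoted in the text

for prev in (0.01, 0.05, 0.20):  # hypothetical prevalence values
    print(f"prevalence {prev:.0%}: PPV = {ppv(SENS, SPEC, prev):.1%}")
# prevalence 1%: PPV = 10.6%
# prevalence 5%: PPV = 38.3%
# prevalence 20%: PPV = 74.7%
```

The same sensitivity and specificity yield very different proportions of true positives among flagged studies, depending on how common the finding is in your patient population.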

Other factors to keep in mind are the operating thresholds the software offers and its support for varying slice thickness. In today’s market, CT scanners are commonly available with slice counts such as 16, 32, 40, 64, and 128, while less common ones offer higher counts of up to 256 and 320 slices. These scanners serve different clinical scenarios, and the images they produce can vary in slice thickness.

It is therefore essential to select an AI solution that performs effectively across different slice thicknesses and at different operating thresholds, so that it can accommodate the diverse needs of these procedures.
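The effect of an operating threshold can be sketched in a few lines of Python. The scores and labels below are invented for illustration and do not come from any real device; the point is only that lowering the threshold tends to raise sensitivity at the cost of specificity, and raising it does the reverse.

```python
def rates_at_threshold(scores, labels, threshold):
    """Return (sensitivity, specificity) when scores >= threshold are flagged."""
    tp = fn = tn = fp = 0
    for score, label in zip(scores, labels):
        flagged = score >= threshold
        if label == 1:
            tp += flagged
            fn += not flagged
        else:
            fp += flagged
            tn += not flagged
    return tp / (tp + fn), tn / (tn + fp)


# Made-up model scores; label 1 = finding present, 0 = finding absent
scores = [0.95, 0.80, 0.60, 0.35, 0.70, 0.40, 0.20, 0.10, 0.05, 0.30]
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]

for t in (0.30, 0.50, 0.75):  # hypothetical operating thresholds
    sens, spec = rates_at_threshold(scores, labels, t)
    print(f"threshold {t:.2f}: sensitivity {sens:.0%}, specificity {spec:.0%}")
# threshold 0.30: sensitivity 100%, specificity 50%
# threshold 0.50: sensitivity 75%, specificity 83%
# threshold 0.75: sensitivity 50%, specificity 100%
```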

Question 3: What long-term support for software service and maintenance will you receive?

It’s essential to evaluate the level of post-purchase support and service available to you. Investing in an AI tool is not like making a one-off product purchase. Algorithms are regularly updated as new clearances are obtained. Top-quality maintenance and service protocols are therefore much more than a nice-to-have; they are essential for continued safe, reliable, and optimal software use.

Look for a reputable AI provider who offers long-term support and frequent updates to help protect the safety of your data and solution against cybersecurity threats. Annalise aims to release quarterly updates to include new features, fix bugs and address new cybersecurity risks in the software.

Annalise Triage is built on the same comprehensive AI model that detects up to 124 chest X-ray (CXR) and 130 CT brain (CTB) findings. This means we have a strong pipeline of findings to be cleared through the FDA. Once cleared, these findings can be turned on within the existing installation. Single-finding solutions, by contrast, require a new software installation whenever they add newly cleared findings, so this approach reduces workflow disruption and saves clinics precious time and resources.

References:

- Hillis JM, Bizzo BC, Mercaldo S, et al. Evaluation of an Artificial Intelligence Model for Detection of Pneumothorax and Tension Pneumothorax in Chest Radiographs. JAMA Netw Open. 2022;5(12):e2247172. doi:10.1001/jamanetworkopen.2022.47172

- Hillis JM, Bizzo BC, Newbury-Chaet I, et al. Evaluation of an artificial intelligence model for identification of intracranial hemorrhage subtypes on computed tomography of the head. medRxiv. 2023:2023.09.07.23295189. doi:10.1101/2023.09.07.23295189

- Seah JCY, et al. Effect of a comprehensive deep-learning model on the accuracy of chest x-ray interpretation by radiologists: a retrospective, multireader multicase study. Lancet Digit Health. 2021;3(8):e496-e506.